“Robot assistants” project aims to reinvent construction industry

$2M project aims to partner humans with robots for safer jobsites.

With the aim of enabling robots to learn from human partners on construction sites, the National Science Foundation is providing $2 million to a University of Michigan-led research team.

Robots are anticipated to make the global construction industry safer and more attractive to workers, easing a worker shortage in the U.S.

For decades, construction has been one of the most dangerous and least efficient human endeavors. It lags far behind other parts of the economy in productivity and struggles to attract workers to jobs that are often perceived as backbreaking. In collaboration with the University of Florida and Washington State University, researchers from U-M’s College of Engineering and Taubman College of Architecture and Urban Planning hope to change that.

Carol Menassa, the lead principal investigator of the research team and an associate professor of civil and environmental engineering at U-M, explains that using automation and robotics on construction sites is critical if construction is to benefit from the productivity gains that have reshaped other industries, like manufacturing.

“Construction is much more dynamic and unpredictable than an environment like a factory, so we’re working to redefine the balance between human and robot workers,” she said. “Humans and robots need to coexist, and that’s the premise of what we’re doing right now.”

The three-year project will pair humans with “interactive robot assistants” that can learn from humans by watching and listening, much as human apprentices would. The robots could eventually make construction work less dangerous and strenuous for humans while still letting humans call the shots and solve problems.

By the end of the project, the team plans to deliver a machine learning system that will enable that learning through natural interaction, as well as a series of freely available educational tools that will train human workers to use those systems effectively. The project also includes outreach to K-12 schools to build awareness and interest in the new opportunities presented by a revamped construction field.

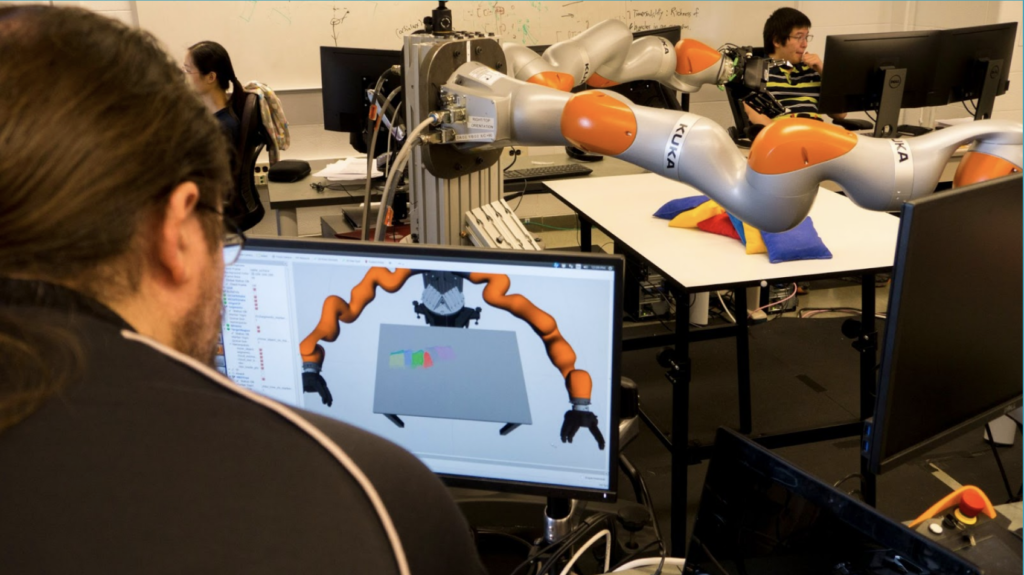

In this vision of the future, robots would do physically strenuous tasks such as lifting bricks or moving sheets of drywall, while humans would figure out the best way to do a particular task or make adjustments when the built structure needs to deviate from the original plan. The research team has already developed an experimental system that enables humans and robots to collaborate on simple tasks like placing drywall panels and ceiling tiles.

“Besides the direct benefits to the construction industry, this research has the potential to have a broader impact on our built environment,” said Arash Adel, a co-principal investigator on the project and an assistant professor at U-M’s A. Alfred Taubman College of Architecture and Urban Planning.

“By taking advantage of the capabilities of the robots, such as their precision for performing construction tasks according to the digital blueprint of the building and their ability to perform nonstandard assembly procedures, this research might open up opportunities for the feasible construction of high-quality novel architectures that are too expensive or not feasible entirely with current construction practices.”

The system uses a virtual-reality copy of both the jobsite and the robot (called a real-time, process-level digital twin), which the human operator interacts with through an Oculus-style VR headset, seeing a video game-like clone of the workspace. Using a joystick-style controller and a pointer, the human shows the system what needs to be done: for example, picking up a sheet of drywall and aligning it on the studded wall. Based on these instructions, the robot works out the most efficient way to do the task and develops a sequence of actions called a motion plan. It then demonstrates its plan to the operator on-screen in the virtual copy of the jobsite.

At this point, it’s up to the human to determine whether the proposed plan will get the job done. They can either approve the plan as-is, make changes or demand a completely new plan. When the plan is agreed upon, the human tells the robot to make it happen, and then watches in near-real time as the actual robot does the work. The machine-learning component will enable the robot to remember corrections made by the human partner so that the next drywall panel goes up faster.
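The approve, modify or replan loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the research team's system: the names `Decision`, `MotionPlan`, `CorrectionMemory` and `agree_on_plan` are hypothetical, the planner is a stub rather than a real motion planner, and the machine-learning component is reduced to simply remembering the last human-approved plan for each task.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List, Optional, Tuple


class Decision(Enum):
    APPROVE = "approve"  # accept the proposed plan as-is
    MODIFY = "modify"    # the human edits the plan
    REPLAN = "replan"    # the human demands a completely new plan


@dataclass
class MotionPlan:
    task: str
    steps: List[str]


@dataclass
class CorrectionMemory:
    """Hypothetical stand-in for learning: stores the last approved plan per task."""
    approved: Dict[str, List[str]] = field(default_factory=dict)

    def recall(self, task: str) -> Optional[List[str]]:
        return self.approved.get(task)

    def remember(self, plan: MotionPlan) -> None:
        self.approved[plan.task] = list(plan.steps)


# planner(task, attempt) -> steps; review(plan) -> (decision, edited steps or None)
Planner = Callable[[str, int], List[str]]
Reviewer = Callable[[MotionPlan], Tuple[Decision, Optional[List[str]]]]


def agree_on_plan(task: str, planner: Planner, review: Reviewer,
                  memory: CorrectionMemory, max_rounds: int = 5) -> MotionPlan:
    # Start from a previously approved plan if one exists, else ask the planner.
    remembered = memory.recall(task)
    plan = MotionPlan(task, remembered if remembered is not None else planner(task, 0))
    for attempt in range(1, max_rounds + 1):
        # The operator inspects the plan as previewed in the virtual copy of the site.
        decision, edited = review(plan)
        if decision is Decision.APPROVE:
            memory.remember(plan)  # keep the human's corrections for next time
            return plan            # only now would the physical robot execute it
        if decision is Decision.MODIFY and edited is not None:
            plan = MotionPlan(task, edited)
        else:
            # REPLAN (or a MODIFY without edits): ask the planner for a fresh plan.
            plan = MotionPlan(task, planner(task, attempt))
    raise RuntimeError(f"no plan for {task!r} approved after {max_rounds} rounds")


# Usage: a stub planner and a simulated operator who adds an alignment step.
def simple_planner(task: str, attempt: int) -> List[str]:
    return [f"grasp {task}", f"lift {task}", f"place {task}"]


reviews: List[List[str]] = []


def operator(plan: MotionPlan) -> Tuple[Decision, Optional[List[str]]]:
    reviews.append(list(plan.steps))
    if not any(step.startswith("align") for step in plan.steps):
        return Decision.MODIFY, plan.steps + [f"align {plan.task} on studs"]
    return Decision.APPROVE, None


memory = CorrectionMemory()
first = agree_on_plan("drywall panel", simple_planner, operator, memory)
second = agree_on_plan("drywall panel", simple_planner, operator, memory)
```

In this sketch the first panel takes two review rounds (one correction, then approval), while the second is approved on the first round because the corrected plan was remembered, a toy version of "the next drywall panel goes up faster."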

The team is collaborating with industry partners, including Michigan firm Barton Malow. Barton Malow already uses simple robots that mortar and place bricks in the exterior walls of large buildings.

Daniel Stone, Barton Malow’s director of innovation, is hopeful that increased use of technology could help stem a long-standing labor shortage, caused by the aging out of baby boomers and the industry’s reputation as dirty and dangerous.

“A job where you’re going to blow out your shoulders in a few years lifting masonry blocks, that’s a hard sell,” he said. “We’re trying to attract a wider range of people to the trades, people who are interested in technology. When you take the burden of lifting off people, we can attract new workers and also enable our current workers to extend their careers.”

For the education component, Menassa’s team is working with construction unions, community colleges and others to develop training courses that can teach workers how to work with robotic assistants.

“The future of construction work in particular is a win-win-win only if it is a fruitful human-robot partnership and collaboration moving forward,” said Vineet Kamat, a co-principal investigator on the project and a professor of civil and environmental engineering at U-M. “So we’re working to adapt current training methods, which use a combination of classroom training and jobsite training with a master construction worker who provides hands-on training.”

Kamat explains that the new training might include training in a classroom, on the jobsite and in a lab, where humans could interact with robots in a virtual reality environment. The virtual reality experience is key, since in the future, human and robot workers may not always be located in the same physical space. By combining on-site and virtual training, workers can learn how their virtual commands translate into physical action and how to work with a robotic assistant to improvise when things don’t go as planned.

The research is funded by the National Science Foundation Future of Work at Human-Technology Frontier program. Additional co-principal investigators on the project are Joyce Chai, U-M professor of electrical engineering and computer science, and Wes McGee, U-M associate professor of architecture and material performance. Other researchers on the project include Honglak Lee, U-M associate professor of electrical engineering and computer science; Jessie Yang, U-M assistant professor of industrial and operations engineering; Curt Wolf, managing director of the Urban Collaboratory in the U-M Department of Civil and Environmental Engineering; Denise Simmons at the University of Florida and Olusola Adesope at Washington State University.